The problem began suddenly, right in the middle of the COVID-19 shutdown in May 2020.

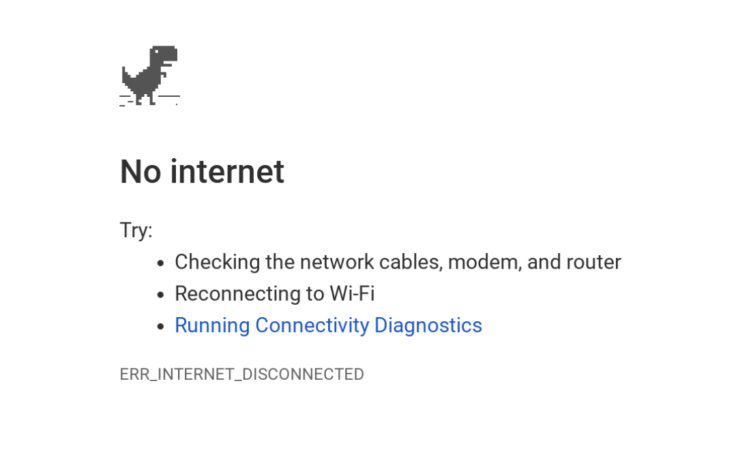

We received a help desk support request from the client, who reported that the company’s employees – most of whom were working from home due to COVID-19 – were frequently losing their remote connection to the office server.

Employees were being forced to reconnect every few minutes and had all but lost the ability to access files stored on the company’s on-premises server remotely.

User productivity was SEVERELY impacted across the entire company. This was an URGENT support request!

Employees Losing Remote Access to Office Server

We began troubleshooting the problem immediately. Because the main office’s Internet connection appeared to be going offline, the likely culprit was either:

- the client’s Internet Service Provider (ISP), or

- the client’s main router/firewall device

We contacted the ISP, who ran tests on their end and told us that their connection and modem were OK. The client also power-cycled the modem and restarted the firewall (turned the devices off, then back on), but the Internet problems continued.

Because the problem also hampered our ability to troubleshoot remotely, we quickly determined that an on-site visit to the client’s office was necessary and scheduled an emergency support visit.

Emergency On-Site Troubleshooting

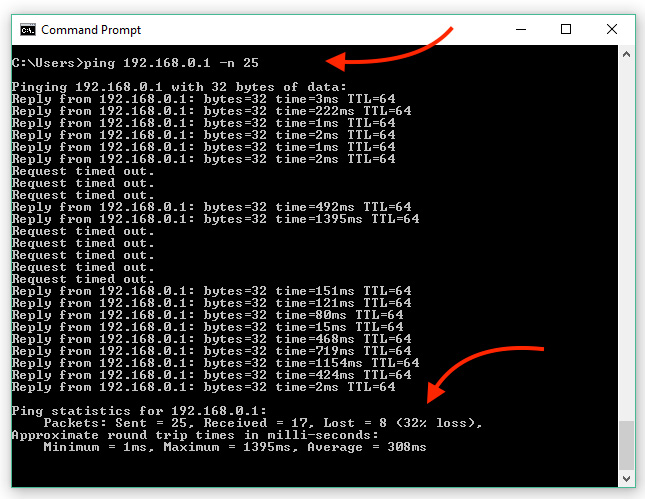

We arrived on-site that same day and got right to work. Internal tests on the network confirmed that the client’s router/firewall device appeared to be going offline every few minutes.

This was an unexpected test result, and it indicated that we were not dealing with a common Internet outage.
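The post doesn’t say which tools produced those test results. One common approach is to probe the router/firewall at a fixed interval (for example with ping) and record each result. The helper below is a minimal sketch under that assumption: it turns timestamped reachable/unreachable samples into outage windows, so a “goes offline every few minutes” pattern becomes visible. The `Sample` record, the 30-second interval, and the example data are all hypothetical, not taken from the actual incident.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sample:
    """One probe of the gateway (hypothetical record format)."""
    t: float         # seconds since monitoring started
    reachable: bool  # True if the router/firewall answered this probe


def outage_windows(samples: List[Sample]) -> List[Tuple[float, float]]:
    """Group consecutive failed probes into (start, end) windows.

    start = time of the first failed probe in a run; end = time of the next
    successful probe, or of the last sample if the outage is still ongoing.
    """
    windows: List[Tuple[float, float]] = []
    start = None
    for s in samples:
        if not s.reachable and start is None:
            start = s.t                    # an outage begins
        elif s.reachable and start is not None:
            windows.append((start, s.t))   # the outage ends
            start = None
    if start is not None:                  # still down at the end of the log
        windows.append((start, samples[-1].t))
    return windows


# Illustrative data: one probe every 30 seconds (made-up values).
log = [Sample(0, True), Sample(30, False), Sample(60, False), Sample(90, True),
       Sample(120, True), Sample(150, False), Sample(180, True)]
print(outage_windows(log))  # [(30, 90), (150, 180)]
```

With real probe data, it is the regular spacing between window start times that would reveal a device dropping off “every few minutes” rather than a single long ISP outage.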

After consulting a colleague, we concluded that the router/firewall device might be failing and should be replaced. We ordered replacement hardware, which shipped the next day and was installed.

Shockingly, the problem persisted even after the new router/firewall was installed. What could be causing it?

Something else appeared to be knocking the whole network offline, and it wasn’t a failure of the ISP modem or the (new) router/firewall.

(By this point the client had experienced more than 24 hours of near-total network down-time and needed a solution quickly.)

Isolating Local Devices on Network

Our working theory was that an unidentified device connected to the network might be causing the problem. But which device was it? Which port was it connected to? Where was it physically located?

To test this theory, we had to disconnect parts of the network one at a time to isolate the problem. Because the client was still using old unmanaged switches, which provide no per-port information, this turned out to be a very manual (and time-consuming) process.

This literally meant unplugging 48+ cables and then plugging them back in one at a time to isolate the source of the problem. When one particular cable was disconnected, the network became stable: the Internet outages stopped!
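The manual procedure described above is essentially a linear search over the switch ports. As a minimal sketch (the real “tooling” here was hands and cables), assume a hypothetical check `is_network_stable(connected)` that reports whether the network stays up with only the listed cables plugged in; in the field, that check amounts to watching the connection for a while after each re-plug.

```python
from typing import Callable, Iterable, List, Optional


def find_culprit_port(
    ports: Iterable[int],
    is_network_stable: Callable[[List[int]], bool],
) -> Optional[int]:
    """Re-plug cables one at a time; return the first port whose
    reconnection destabilizes the network, or None if none does.

    is_network_stable is a hypothetical check supplied by the caller.
    """
    connected: List[int] = []          # start with every cable unplugged
    for port in ports:
        connected.append(port)         # plug this cable back in
        if not is_network_stable(connected):
            connected.pop()            # unplug it again and report it
            return port
    return None                        # no single cable triggered the fault


# Simulated network in which the cable on (arbitrary) port 31 causes the outage.
faulty = 31
print(find_culprit_port(range(1, 49), lambda c: faulty not in c))  # 31
```

This needs up to one check per cable (48 here). If exactly one device is at fault, a halving (bisection) strategy, reconnecting half of the remaining cables at a time, would need at most 6 checks for 48 cables; that is an alternative technique, not the one used in this case.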

Yet, the burning question remained: what was on the other end of that cable? What was the mystery device that appeared to be causing the WHOLE network to fail?

Because the client had not yet upgraded to newer “smart” (managed) switches, which record and report information about every device connected to each port, we had no way to identify the problematic “mystery” device.

Although the immediate problem had been resolved (the Internet connection was stable again) and the client was no longer in “Emergency Mode”, we still needed to find the source of the problem to make sure it would not come back to haunt us. So the next step was to replace the switches.

Identifying the Problematic Mystery Device

Several days later, after installing two new managed 48-port switches, we could finally pinpoint specific devices on the network with our network management software.

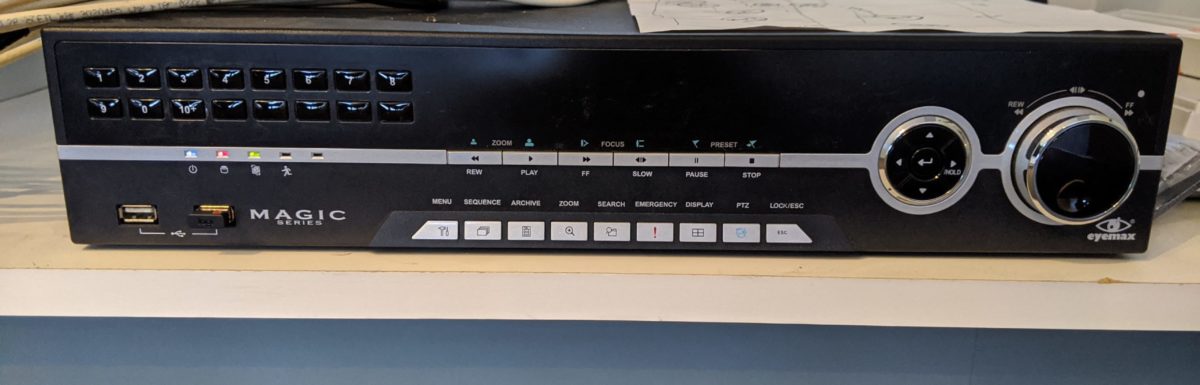

We quickly found the “mystery device” on Port #26 of one of the switches: its MAC address identified it as having been manufactured by a company named “EYEMAX”.

Cross-checking the device’s IP address against our database, we finally identified it:

It was the client’s old camera DVR system! Disconnecting the DVR from the network resulted in 100% Internet connection stability once again.
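The post doesn’t name the network management software, but the lookup it performed can be sketched as a chain: switch port → MAC address (from the managed switch’s MAC address table) → manufacturer (for most addresses the first three octets are an IEEE-assigned vendor prefix, or OUI) → IP address (from an ARP table) → asset name (from an inventory). The function below is a minimal, hypothetical sketch of that chain; every table value in the example is a placeholder, including the vendor prefix, which is not EYEMAX’s real OUI.

```python
from typing import Dict, Optional

HEX = set("0123456789ABCDEF")


def normalize_mac(mac: str) -> str:
    """Return a MAC as 12 uppercase hex digits (accepts ':', '-' or '.' separators)."""
    digits = "".join(ch for ch in mac if ch not in ":-.").upper()
    if len(digits) != 12 or not set(digits) <= HEX:
        raise ValueError(f"not a MAC address: {mac!r}")
    return digits


def identify_port(
    port: int,
    mac_table: Dict[int, str],    # switch port -> MAC (assumes one device per port)
    oui_vendors: Dict[str, str],  # 6-hex-digit OUI -> manufacturer
    arp_table: Dict[str, str],    # normalized MAC -> IP address
    assets: Dict[str, str],       # IP address -> inventory name
) -> Dict[str, Optional[str]]:
    """Follow the chain port -> MAC -> vendor/IP -> asset name."""
    mac = normalize_mac(mac_table[port])
    vendor = oui_vendors.get(mac[:6], "unknown vendor")  # first 3 octets = OUI
    ip = arp_table.get(mac)
    asset = assets.get(ip, "not in inventory") if ip else "no IP seen"
    return {"mac": mac, "vendor": vendor, "ip": ip, "asset": asset}


# Placeholder data only: "AABBCC" is NOT EYEMAX's real OUI, and the IP is made up.
info = identify_port(
    26,
    mac_table={26: "aa:bb:cc:11:22:33"},
    oui_vendors={"AABBCC": "EYEMAX"},
    arp_table={"AABBCC112233": "192.168.1.50"},
    assets={"192.168.1.50": "Camera DVR"},
)
print(info["vendor"], info["ip"], info["asset"])  # EYEMAX 192.168.1.50 Camera DVR
```

Randomized or locally administered MAC addresses won’t match any vendor, and some IEEE assignments use prefixes longer than 24 bits, so a real lookup should work from the current IEEE registry rather than a fixed 6-digit table.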

Camera System DVR: HACKED

However, the DVR itself was still a problem: with the DVR disconnected from the network and the Internet, the company’s CEO could no longer view footage remotely from his smartphone (although the cameras and recorder continued to function).

We still needed a way to get the DVR working that would NOT cripple the entire network, so we contacted the DVR vendor.

Several calls with the vendor revealed that the DVR system had likely been HACKED. A senior engineer at the DVR vendor explained that he had seen this exact issue with many of their other customers.

The engineer added that attackers had been actively exploiting this particular DVR system to attack thousands of vulnerable business networks, and he recommended several “fixes” for the old system that he claimed would work.

The vendor engineer’s insight was surprising, to say the least – and it explained everything.

However, his suggested “fixes” were dubious and not guaranteed to work, so we recommended the safest course of action to the client: replacing the compromised DVR with a newer (and more secure) system, which they did.

The Internet connection was working again, and the company had a new, secure camera recording system. Huzzah!

Additionally, the upgraded network switches we installed gave us the ability to catch and quickly resolve similar problems in the future.
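One way managed switches help here is per-port traffic counters: many expose inbound broadcast packet counts per port, for example the IF-MIB `ifHCInBroadcastPkts` counter over SNMP. The sketch below assumes two snapshots of such counters have already been collected (how they are fetched is not shown). It computes broadcast packets per second on each port and flags ports above a threshold, which is one way a broadcast storm like the one discussed in the analysis below could be traced to a single port. The 1,000 packets/second threshold and the counter values are hypothetical.

```python
from typing import Dict, List


def broadcast_rates(
    before: Dict[int, int], after: Dict[int, int], interval_s: float
) -> Dict[int, float]:
    """Broadcast packets per second for each port between two counter snapshots."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    rates: Dict[int, float] = {}
    for port, end in after.items():
        start = before.get(port)
        if start is None or end < start:
            continue  # new port, or counter reset/wrapped: skip this interval
        rates[port] = (end - start) / interval_s
    return rates


def storm_suspects(
    before: Dict[int, int],
    after: Dict[int, int],
    interval_s: float,
    threshold_pps: float = 1000.0,  # hypothetical threshold, tune per network
) -> List[int]:
    """Ports whose inbound broadcast rate exceeds threshold_pps, sorted."""
    rates = broadcast_rates(before, after, interval_s)
    return sorted(p for p, pps in rates.items() if pps > threshold_pps)


# Made-up counters sampled 30 seconds apart: port 26 floods broadcasts.
before = {1: 1_000, 2: 5_000, 26: 40_000}
after = {1: 1_300, 2: 5_900, 26: 3_040_000}
print(storm_suspects(before, after, 30))  # [26]
```

Many managed switches also offer built-in storm control that rate-limits broadcast traffic per port; the exact feature name and settings vary by vendor.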

Analysis: We Got Lucky

While it’s very likely that the hackers did compromise the old DVR system (as the vendor engineer claimed), it appears that they actually broke the device in the process.

Thankfully, all signs pointed to the hack failing to achieve its goal. Instead, the hack seemed to have “only” bricked the DVR and triggered a broadcast storm (a flood of broadcast traffic that every device on the local network, including the router/firewall, must receive and process) that crushed the client’s Internet connection.

The attackers accidentally broke the very device they were trying to use as their “point of entry” into the network, sabotaging their own operation, which could otherwise have resulted in a serious data breach and real harm to the client’s business.

In other words, we got lucky.

Conclusion

While this down-time incident was extremely disruptive to the client’s business, the silver lining is that no permanent damage was done, and the client’s network ended up faster and more secure as a result.

If there’s a moral to this story, it’s this: any business relying on old, insecure hardware and software is at increased risk for serious down-time and data loss.

Don’t wait for a disaster like this to strike. If your company is running on “legacy” (OLD) systems and you need small business cyber security help, feel free to contact us.